Blog 1: Post Production Studio vs. Recording Studio

Knowing the difference makes a big difference in what you are hearing!

On the home page of this website I use the term “post production studio” a lot. What’s the difference between a recording studio and a post production studio? Well, a recording studio is the place where a song, a TV commercial, or anything else is recorded, and a post production studio is the place where the sound of that recording is edited and enhanced to commercial quality.

But are the studios themselves different, or at least should they be? Yes. To explain the biggest difference between the two, let’s first talk about a fundamental audio engineering idea that I call acoustification (more commonly known as acoustic treatment).

When sound comes out of a pair of speakers, it bounces around a room. It strikes hard surfaces and gets reflected, sometimes bouncing back and forth several times until its energy is so low it can no longer be heard. During that process, some frequencies get artificially augmented. Other frequencies get canceled out (a process called phase cancellation) and can no longer be heard. The net result is that, depending on where you are sitting in the room, some frequencies will sound louder than they were when they left the speakers, and some will sound softer or not be heard at all. (I call these distortions “deviation from frequency amplitude fidelity,” or DFAF.) The point is that the listener will be hearing something quite different from what actually comes out of the speakers.

In the audio world, that is a bad thing. In the recording studio, it’s bad because the musicians performing will each be reacting to a different set of sounds. And in the post production studio, the engineer will not be hearing what the band actually recorded when creating an MP3 or a release for any other music platform. So guess what? The person who listens to the song at home on their music system will be hearing a piece of music as distorted by a room. “Geez, I wonder what the song really sounded like?” is a fair question. The difference between the two can be enormous, as I’ll discuss below.
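For readers who like to see the numbers, here is a minimal Python sketch of the boosting and canceling described above. It is a deliberately simplified model, not a description of any real room: it assumes a single reflection that travels a little farther than the direct sound and arrives a bit quieter. The function name `level_change_db`, the `reflection_gain` of 0.7, and the 0.343 m extra path are all my own illustrative assumptions.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # metres per second, roughly, at room temperature


def level_change_db(freq_hz, extra_path_m, reflection_gain=0.7):
    """Level change in dB at the listener when one reflection joins the direct sound.

    extra_path_m is how much farther the reflection travels than the direct sound.
    reflection_gain is the reflection's amplitude relative to the direct sound
    (an assumed value; real surfaces vary with material and frequency).
    """
    delay_s = extra_path_m / SPEED_OF_SOUND
    # The direct sound is the "1"; the reflection is a delayed, quieter copy.
    combined = 1 + reflection_gain * cmath.exp(-2j * math.pi * freq_hz * delay_s)
    return 20 * math.log10(abs(combined))


if __name__ == "__main__":
    # A reflection that travels 0.343 m farther arrives about 1 ms late.
    for freq in (100, 250, 500, 750, 1000):
        change = level_change_db(freq, extra_path_m=0.343)
        print(f"{freq:>5} Hz: {change:+.1f} dB")
```

Under these assumptions, a tone at 1000 Hz comes out a few dB louder than it left the speakers, while a tone at 500 Hz is cut by roughly 10 dB, even though both were played at the same volume. A real room has many reflections at once, so the actual pattern is far messier.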

In a recording studio you will typically see acoustical padding on walls along with a bunch of mics placed around the place for the different musicians to play or sing into. The proper “acoustification” of the room is important so all the musicians are hearing the same thing all the time wherever they sit (or move to) in the room. There will always be a small difference in frequency amplitude fidelity, but the difference must be minimized as much as the physics of acoustical treatments can achieve.

In a post production studio, it’s a little different. The only space that matters is the point where the music coming out of the speakers meets the ears of the engineer. Just that one place in the room. And it had better be as close to 100% frequency amplitude fidelity as possible.

So in the case of the post production studio, what matters is not keeping DFAF reasonably low across the entire room. It’s about keeping DFAF as close to zero as possible in a space the size of a human head. Over the last several years in audio mixing and mastering, that goal has become achievable with advanced audio technology.

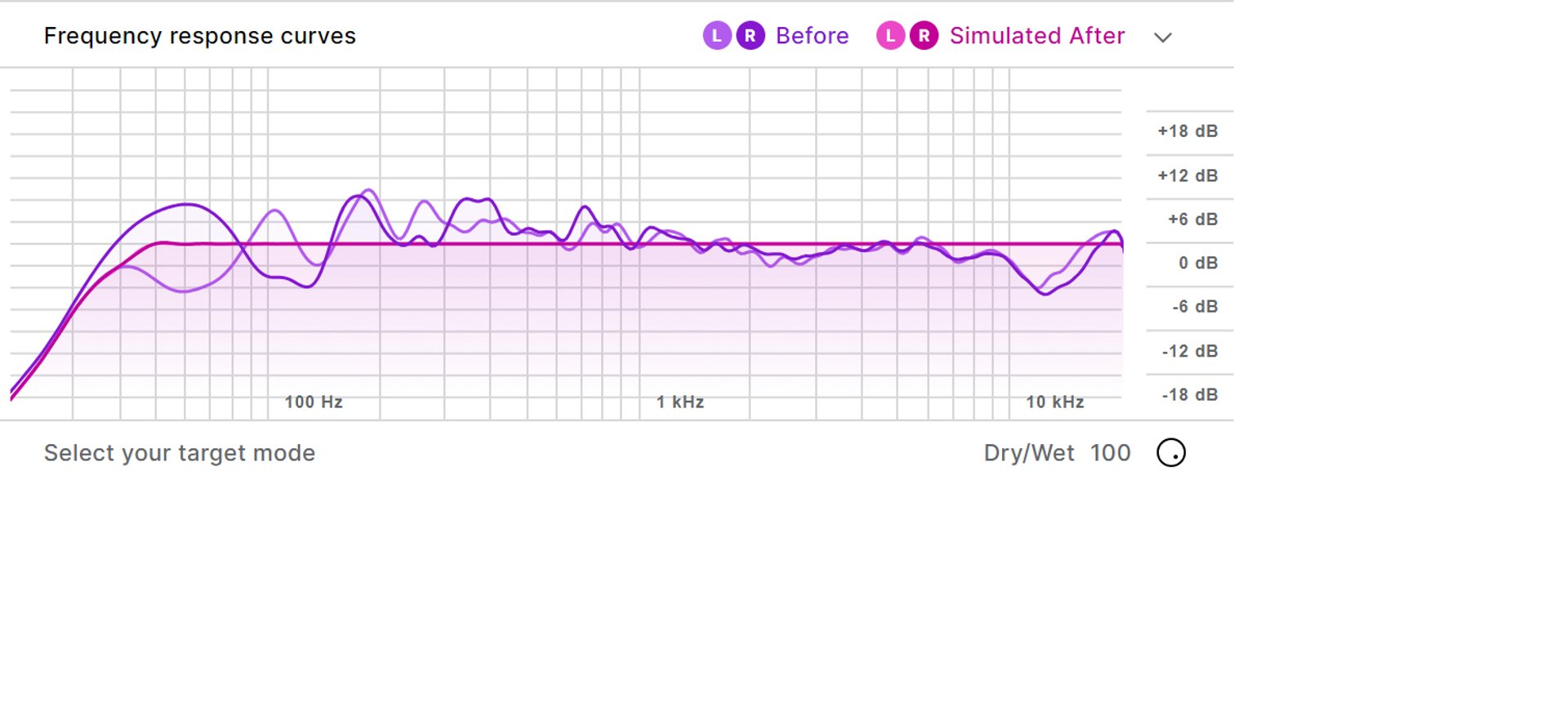

It’s called room calibration software. There are several products built on this cutting-edge advancement, including Sonarworks’ SoundID Reference, IK Multimedia’s ARC System 3, and Dirac Live.

I settled on SoundID Reference a few years ago. Here’s how it works: The software comes with a precision microphone that measures a wide range of musical pitches across the frequency spectrum at 36 different positions in the room, working outward from the “sweet spot.” The sweet spot is the one place that is equidistant from each of the monitors in front of the video screens in the room, and those monitors are, in turn, that same distance from each other. The software “knows” the volume (amplitude) of each frequency it sends out of the speakers. It compares what the microphone picks up with the original output volume for each of these pitches, then adjusts each one back to the original. The result is that what the engineer hears from the sweet spot is at the original volume levels, before they were distorted by the room reflections! The engineer is hearing the music’s pitches at the volumes at which they were actually recorded. Yes!
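The measure, compare, and adjust idea can be sketched in a few lines of Python. To be clear, this is only my own simplified illustration of the principle, not how SoundID Reference or any other product is actually implemented. The function names are hypothetical and the levels are made-up numbers, not real measurements.

```python
def correction_gains_db(reference_db, measured_db):
    """Per-frequency gain (dB) that would bring each measured level back to its reference."""
    return {freq: reference_db[freq] - measured_db[freq] for freq in reference_db}


def apply_gains_db(levels_db, gains_db):
    """Levels that result once each frequency is adjusted by its gain."""
    return {freq: levels_db[freq] + gains_db[freq] for freq in levels_db}


def worst_deviation_db(reference_db, heard_db):
    """Largest gap (dB) between what was sent and what is heard: a rough stand-in for DFAF."""
    return max(abs(heard_db[freq] - reference_db[freq]) for freq in reference_db)


if __name__ == "__main__":
    # Illustrative numbers only: every test tone leaves the speakers at 80 dB,
    # but the room inflates the bass and dulls the highs at the sweet spot.
    reference = {60: 80.0, 250: 80.0, 1000: 80.0, 4000: 80.0, 12000: 80.0}
    measured = {60: 86.5, 250: 78.0, 1000: 80.5, 4000: 77.0, 12000: 74.0}

    gains = correction_gains_db(reference, measured)
    corrected = apply_gains_db(measured, gains)

    print("Correction per frequency (dB):", gains)
    print("Worst deviation before:", worst_deviation_db(reference, measured), "dB")
    print("Worst deviation after: ", worst_deviation_db(reference, corrected), "dB")
```

In this toy version the correction is perfect because the same numbers are used for measuring and for checking. A real system has to cope with many measurement positions, limits on how much boost the speakers can take, and other details this sketch ignores.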

In my early student days, I would spend endless hours listening to YouTube videos of experienced sound professionals playing examples of music and saying, for example, “Listen how the high end needs to be turned down.” I couldn’t hear it. What I didn’t know was that despite my own training and the great sound system I had put together, my room was artificially changing the bass I was hearing and causing the highs to sound much softer than they actually were. When I finally applied the room calibration software, ah, the eureka moment! The sweet heavens opened! I could actually hear what the audio gurus were hearing.

Today, everything that passes through my monitors or headphones is calibrated by SoundID Reference. (There is also a protocol for studio headphone monitors, which we’ll discuss in another blog.)

And when I say everything, I mean everything. When I get my hands on a new release of a song I want to hear, there is only one place in my house I’ll go to hear it: that tiny space where my head and my two speakers sit at three points equidistant from one another. Ah, music as it was meant to be heard.

That’s how I like listening to my own music. That’s the same experience I want to create for you.

The pic above was taken in my studio and shows most of the hardware I use when doing post production. As discussed above, however, the most important “ware” in a post production studio is software, not hardware. Critical in the software library of a good post production studio is the “room calibration” technology covered above.